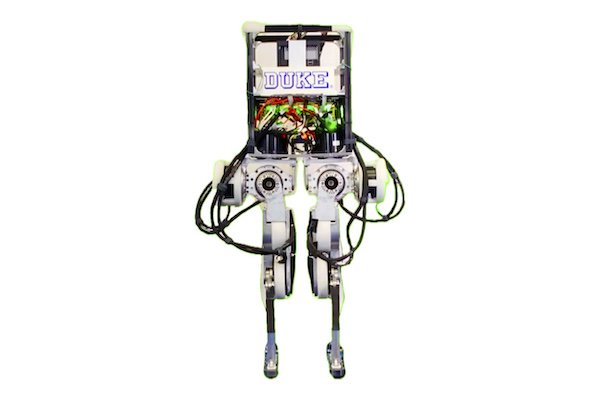

I am an Assistant Professor at Duke University, where I lead the General Robotics Lab. I obtained my Ph.D. at Columbia University with Hod Lipson. My research interests span Robotics, Perception, Machine Learning, Human-AI Teaming, AI for Science, and Dynamical Systems.

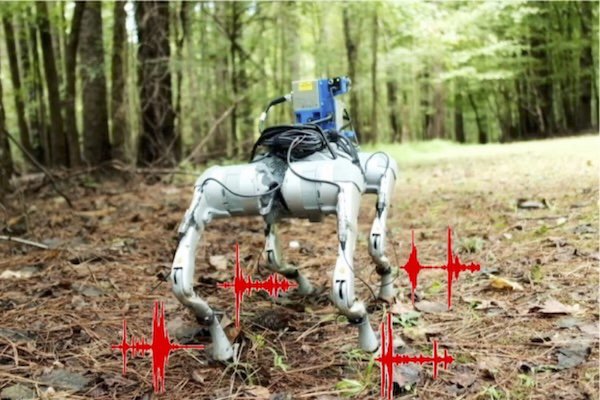

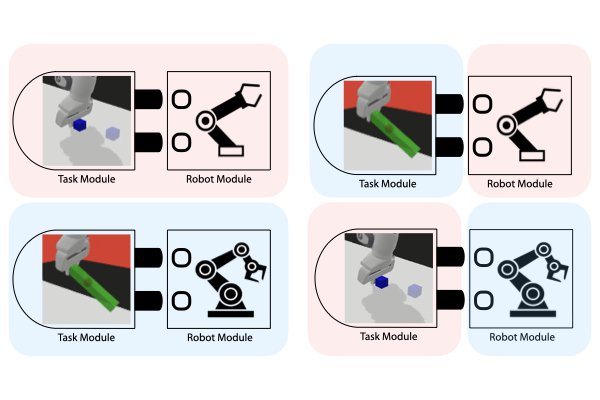

I am interested in developing "generalist robots" that learn, act, and improve by perceiving and interacting with the complex and dynamic world. Ultimately, I hope that robots and machines can be equipped with high-level cognitive skills to assist people and unleash human creativity.

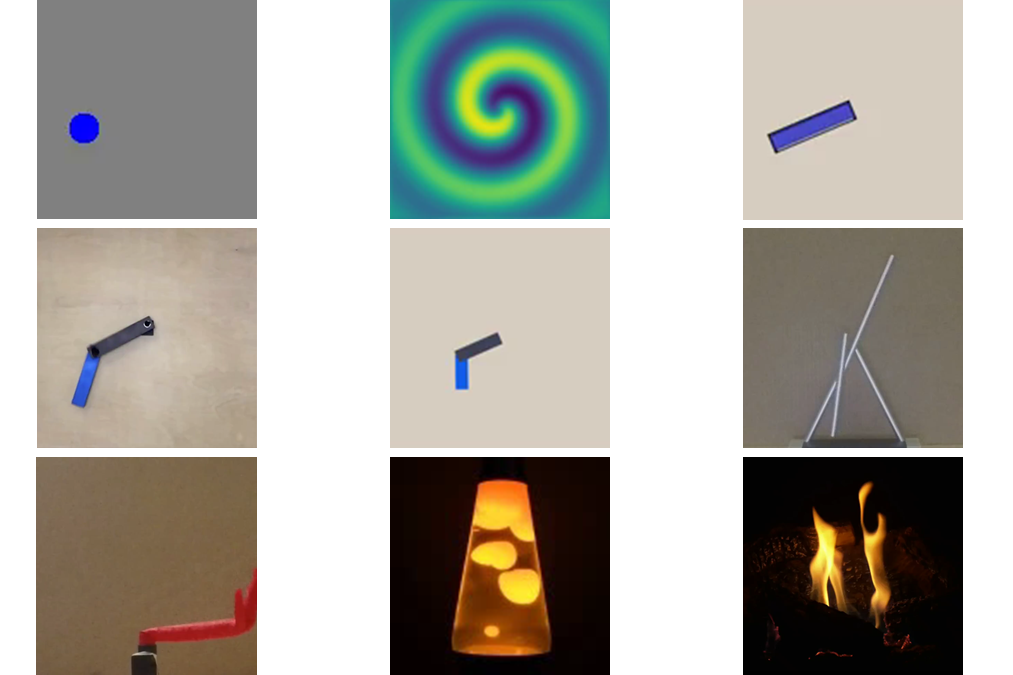

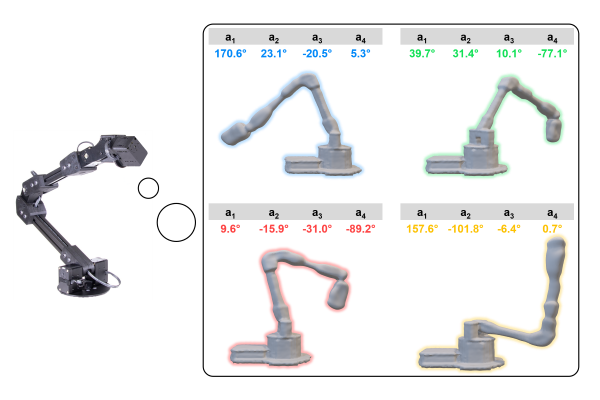

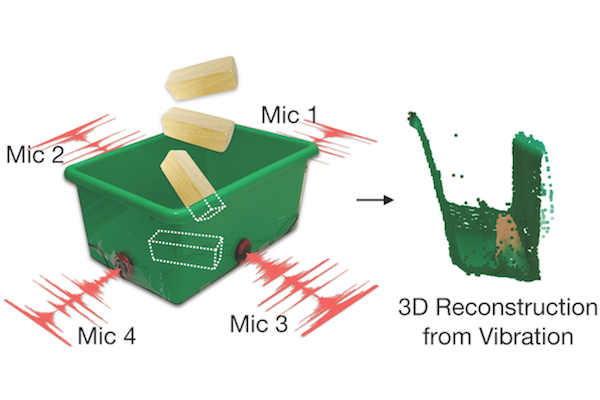

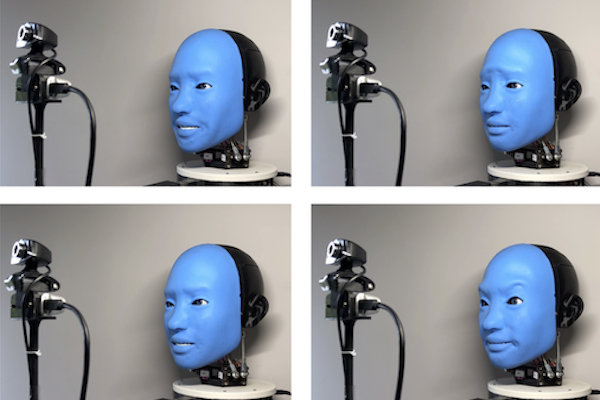

Videos

Our research is sometimes best described by brief videos and animations. Here are some of them. If you are interested in more details, please see the full publication list.